Testing AI Vision's Understanding of High Fashion Nuances

The first comprehensive evaluation of vision models for fashion intelligence


We present the first comprehensive benchmark evaluating vision models for fashion intelligence, testing CLIP, SigLIP, and DINOv2 on 12,147 Rick Owens runway images across 23 years of collections. Through 3.66 million image comparisons across three evaluation frameworks (impostor detection, collection cohesion, and exact matching), we find that SigLIP achieves the strongest fashion intelligence, with a positive uncertainty detection gap of +0.079 and 63.5% collection purity. In our tests this makes it the only model suitable for production fashion AI systems, despite a 9.6x processing cost.

Key Finding

SigLIP is the only model with proper uncertainty detection, achieving a positive noise gap of +0.079, while CLIP (-0.015) and DINOv2 (-0.070) show negative gaps that indicate dangerous overconfidence.

We evaluated three state-of-the-art vision models representing different approaches to visual representation learning (two contrastive image-text models and one self-supervised, vision-only model):

SigLIP (google/siglip-so400m-patch14-384): Google's enhanced CLIP variant with sigmoid loss, 1152-dimensional embeddings, 400M parameters.

CLIP (openai/clip-vit-base-patch32): OpenAI's original contrastive model, 512-dimensional embeddings.

DINOv2 (facebookresearch/dinov2_vitb14): Meta's self-supervised vision model, 768-dimensional embeddings.

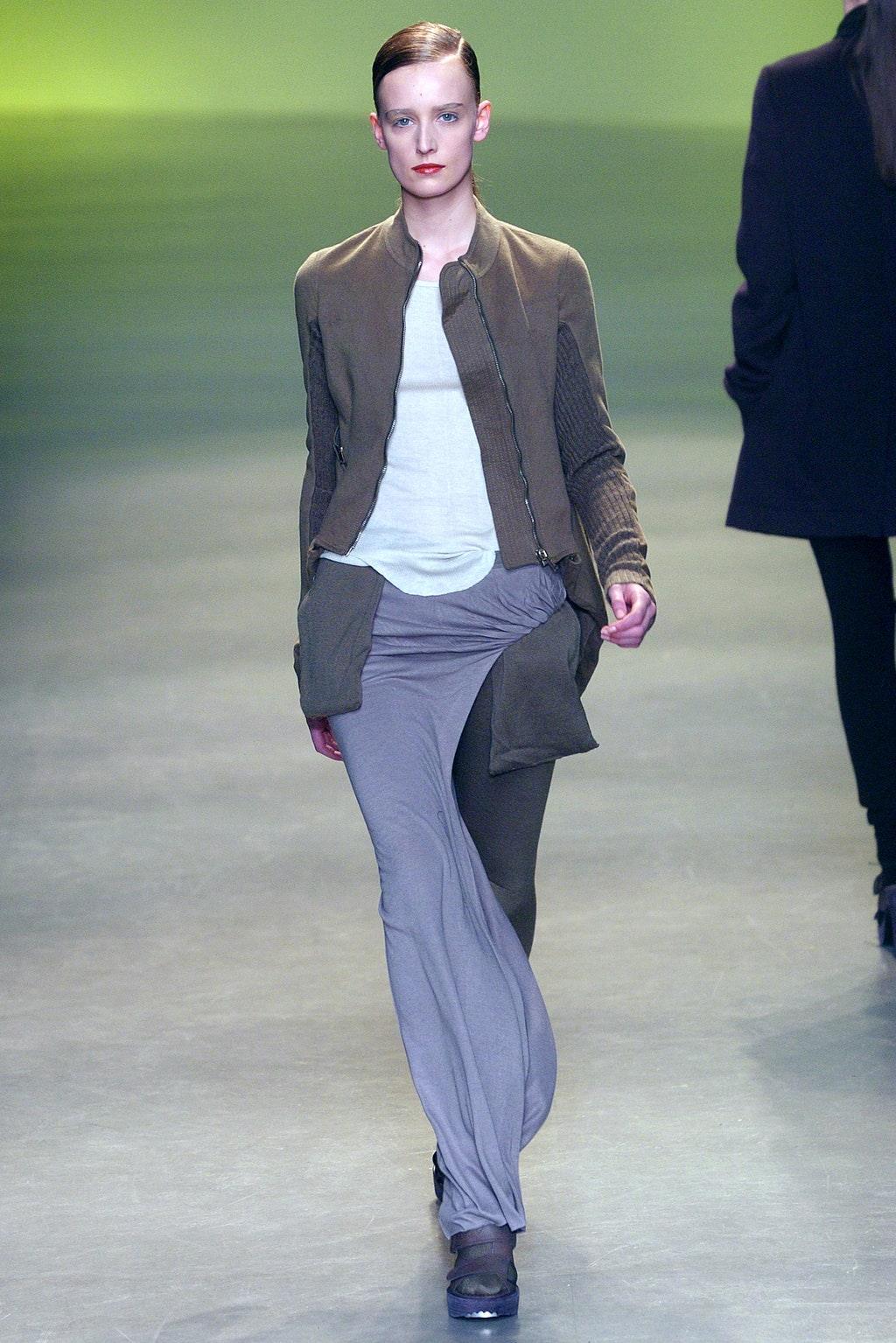

Our dataset comprises 12,147 Rick Owens runway images spanning 23 years (2002-2025) across multiple collections:

Fall Collections: 2002, 2003, 2004, 2008, 2013, 2015, 2016, 2021, 2024

Spring Collections: 2004, 2006, 2007, 2010, 2012, 2013, 2015, 2016, 2017, 2019, 2020, 2023, 2025

Coverage: Menswear, Ready-to-Wear, Beauty campaigns

Processing was performed on an M4 Mac using the Apple Silicon GPU. Embeddings are stored in a Pinecone vector database for similarity search. All models are implemented in PyTorch with the transformers library.

Impostor Detection

Task: From pixels only, identify whether candidates belong to the same designer and season/look as the query. The desired behavior is low confidence on impostors.

Models see no brand text. They must match designer + season/look from pixels alone. SigLIP is properly uncertain on B and C; CLIP/DINOv2 are overconfident.
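As a rough illustration of how a gap of this kind could be computed, the sketch below assumes the gap is the mean cosine similarity between the query and genuine candidates minus the mean similarity between the query and impostors. That definition is an assumption made for illustration, not the benchmark's stated formula, and the names `cosine_similarity` and `uncertainty_gap` as well as the toy vectors are hypothetical.

```python
import math
from statistics import mean
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def uncertainty_gap(
    query: Sequence[float],
    genuine: Sequence[Sequence[float]],
    impostors: Sequence[Sequence[float]],
) -> float:
    """ASSUMED definition: mean similarity to genuine matches minus
    mean similarity to impostors. Positive means impostors score lower."""
    genuine_mean = mean(cosine_similarity(query, g) for g in genuine)
    impostor_mean = mean(cosine_similarity(query, i) for i in impostors)
    return genuine_mean - impostor_mean


# Toy 2-D embeddings (hypothetical, not benchmark data)
query = [1.0, 0.0]
genuine = [[1.0, 0.0], [0.8, 0.6]]
impostors = [[0.0, 1.0], [0.6, 0.8]]
gap = uncertainty_gap(query, genuine, impostors)
```

Under this assumed definition, a positive gap means the model scores impostors lower than genuine matches, while a negative gap means impostors score higher on average.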

Family Recognition

Task: Given one look from a season, find other looks from the same designer and season (collection cohesion).

SigLIP maintains season purity (63.5%); CLIP/DINOv2 mix in other seasons (e.g., Fall 2016).
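Collection purity, described in the results as the percentage of retrieved images that come from the correct collection, can be sketched as follows. The function name `collection_purity` and the collection label strings are hypothetical, and how the benchmark chooses the number of retrieved images per query is not stated here.

```python
from typing import Sequence


def collection_purity(query_collection: str, retrieved_collections: Sequence[str]) -> float:
    """Fraction of retrieved images whose collection label matches the query's."""
    if not retrieved_collections:
        return 0.0
    hits = sum(1 for label in retrieved_collections if label == query_collection)
    return hits / len(retrieved_collections)


# Hypothetical retrieval result for one query look
retrieved = ["fall-2004", "fall-2004", "fall-2016", "fall-2004"]
purity = collection_purity("fall-2004", retrieved)
```

Averaging this value over all query looks would give a single purity figure per model.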

Needle Search

Task: Find the exact same look in a large database (designer + season + look must match).

SigLIP finds the exact Fall 2004 look; other models are distracted by near neighbors from 2008/2013.
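The needle search can be pictured as a top-1 nearest-neighbor lookup followed by an exact-ID check. The sketch below is a minimal in-memory version that assumes brute-force cosine similarity over a small dictionary; the benchmark itself queries Pinecone, and the names `find_needle` and `needle_precision`, the look IDs, and the toy vectors are all hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple


def _normalize(v: Sequence[float]) -> list:
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def find_needle(query: Sequence[float], index: Dict[str, Sequence[float]]) -> str:
    """Return the ID of the indexed look with the highest cosine similarity."""
    q = _normalize(query)

    def score(look_id: str) -> float:
        return sum(a * b for a, b in zip(q, _normalize(index[look_id])))

    return max(index, key=score)


def needle_precision(
    queries: Sequence[Tuple[str, Sequence[float]]],
    index: Dict[str, Sequence[float]],
) -> float:
    """Share of queries whose top-1 result is exactly the expected look."""
    hits = sum(1 for expected_id, vec in queries if find_needle(vec, index) == expected_id)
    return hits / len(queries)


# Toy index (hypothetical IDs and 2-D embeddings)
index = {
    "fall-2004-look-12": [1.0, 0.0],
    "fall-2008-look-03": [0.7, 0.7],
    "fall-2013-look-21": [0.0, 1.0],
}
queries = [
    ("fall-2004-look-12", [0.95, 0.1]),  # retrieved correctly
    ("fall-2013-look-21", [0.6, 0.8]),   # distracted by the 2008 neighbor
]
precision = needle_precision(queries, index)
```

In this toy setup the second query lands on a near neighbor from another season, which is the failure mode described above.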

Uncertainty Detection Performance

SigLIP: +0.079

CLIP: -0.015

DINOv2: -0.070

Only positive values indicate proper uncertainty detection

Collection Purity Results

SigLIP: 63.5%

CLIP: 48.8%

DINOv2: 48.6%

Percentage of retrieved images from correct collection

Processing Speed Comparison

CLIP: 1.9 min

DINOv2: 2.7 min

SigLIP: 18.7 min

Designer Gauntlet Test (50 comparisons) • SigLIP's 10x processing cost delivers superior accuracy

SigLIP Embedding Generation

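    import torch
    from PIL import Image
    from transformers import AutoModel, AutoProcessor

    MODEL_ID = "google/siglip-so400m-patch14-384"
    DEVICE = "mps"  # Apple Silicon GPU

    # Load SigLIP model and its matching image processor
    siglip_model = AutoModel.from_pretrained(MODEL_ID, torch_dtype=torch.float16).to(DEVICE)
    siglip_processor = AutoProcessor.from_pretrained(MODEL_ID)

    # Generate embedding
    image = Image.open("rick-owens-fall-2011-menswear-39.jpg").convert("RGB")
    inputs = siglip_processor(images=image, return_tensors="pt")
    # Move pixels to the model's device and dtype
    pixel_values = inputs["pixel_values"].to(DEVICE, dtype=torch.float16)

    with torch.no_grad():
        image_features = siglip_model.get_image_features(pixel_values=pixel_values)

    # L2-normalize so that a dot product equals cosine similarity
    embedding = image_features / image_features.norm(dim=-1, keepdim=True)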

Vector Search Implementation

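    from typing import List

    async def identify_image(self, image_embedding: List[float]):
        """Find most similar runway image using cosine similarity."""
        # Query Pinecone vector database
        query_result = self.img_index.query(
            vector=image_embedding,
            top_k=1,
            include_metadata=True,
            filter={"file_type": "look"},  # Only runway looks
        )

        # top_k=1 returns at most one match; this empty-result guard is an
        # assumption added here, not part of the original excerpt
        if not query_result.matches:
            return None
        match = query_result.matches[0]

        # Calculate confidence
        similarity_score = match.score
        if similarity_score >= 0.95:
            confidence = 99.0  # Near-perfect match
        elif similarity_score >= 0.90:
            confidence = 95.0  # Strong match
        # (lower-similarity tiers are not shown in this excerpt)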

| Model | Uncertainty Detection | Collection Purity | Needle Precision | Processing Speed |
|---|---|---|---|---|
| SigLIP | +0.079 gap (PASS) | 63.5% purity | 100% accuracy | 18.7min (10x cost) |
| CLIP | -0.015 gap (FAIL) | 48.8% purity | 90.0% accuracy | 3.1s |
| DINOv2 | -0.070 gap (FAIL) | 48.6% purity | 71.4% accuracy | 4.2s |

Key Finding

These results indicate that current embedding models can detect fashion changes and could be applied to tracking users' closet items and powering enterprise-level inventories.

"SigLIP's 9.6x processing cost delivers production-ready accuracy for luxury fashion authentication."

Only SigLIP demonstrates positive uncertainty detection (+0.079 gap), correctly identifying when impostor designs don't belong. CLIP and DINOv2 show dangerous overconfidence with negative gaps, making them unsuitable for high‑stakes fashion authentication where accuracy matters more than speed.

Part of the Caeliai Genesis research series investigating computer vision approaches to fashion and design understanding.